If you follow trends in the software development world, you know that serverless architecture is becoming quite popular. Before diving into this new technology, however, it is wise to consider whether there will be anything gained by abandoning a traditional server. Is it worth using a serverless platform for your API gateway, or is it just a fad that will soon pass?

What Is “Serverless”?

A traditional server can be described as a virtual machine listening for requests on port 80. This has been the standard of the internet for quite some time. A serverless platform, on the other hand, is one of many cloud computing options that attempt to take the pain out of scalability. Rather than running 24/7, a serverless app waits for requests to come in and fires up as many instances as needed to handle them, shutting down once the work is complete.

Using a platform like Microsoft Azure Functions or AWS Lambda (a product of Amazon Web Services), you may not have to think about the hardware at all, since it is managed for you, seemingly by magic, within the walls of a remote data center. This is the ultimate expression of “auto scale”. If no one is using the service, there is no charge. On the other hand, if your app becomes an overnight sensation, scalability is already taken care of. There is also the added benefit of not having to worry about operating systems within a serverless framework; the platform is abstracted in such a way as to allow developers to focus on writing their web applications.
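To make the "no server to manage" idea concrete, here is a minimal sketch of an HTTP-triggered function written in the style of the older (v3) Azure Functions Node.js programming model. The function name and response shape are assumptions for illustration. A real function app also needs an HTTP trigger binding (for example a function.json file), which is omitted here, and newer versions of the programming model register handlers differently.

```javascript
// Sketch only: the platform, not our code, listens for HTTP requests
// and invokes this function once per request.
// `randomNumberFunction` is a hypothetical name chosen for illustration.
async function randomNumberFunction(context, req) {
  // In the v3 model, the HTTP response is set on context.res.
  context.res = {
    status: 200,
    body: { value: Math.random() },
  };
}

// The v3 model expects the handler as the module's export.
module.exports = randomNumberFunction;
```

Note that there is no port, no listener and no process lifecycle in the code; those concerns are left to the platform.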

The Performance Test

An interesting way to see how well serverless computing performs is to put it to the test. We wrote a simple JavaScript server that generates a random number 100,000 times for each request it receives. It does so asynchronously and in parallel, to help prevent the server from locking up and hanging requests during processing. We chose Microsoft Azure as our cloud vendor for this test.
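The original test code is not reproduced here, but a workload of this kind might look roughly like the sketch below. The function names, the split into 10 chunks and the use of setImmediate are all assumptions. Because Node.js runs JavaScript on a single thread, the chunks are interleaved with other events rather than executed truly in parallel.

```javascript
// Hypothetical sketch of the test workload; not the code used in the test.
const ITERATIONS = 100000; // random numbers generated per request (from the article)
const CHUNKS = 10;         // assumed number of chunks the work is split into

// Generate `count` random numbers in a deferred callback so that other
// pending events can be processed between chunks.
function randomChunk(count) {
  return new Promise((resolve) => {
    setImmediate(() => {
      let last = 0;
      for (let i = 0; i < count; i++) {
        last = Math.random();
      }
      resolve({ count, last });
    });
  });
}

// Schedule all chunks at once and wait for every one of them to finish.
async function runWorkload(iterations = ITERATIONS, chunks = CHUNKS) {
  const perChunk = Math.ceil(iterations / chunks);
  const tasks = [];
  for (let done = 0; done < iterations; done += perChunk) {
    tasks.push(randomChunk(Math.min(perChunk, iterations - done)));
  }
  const results = await Promise.all(tasks);
  return {
    generated: results.reduce((sum, r) => sum + r.count, 0),
    last: results[results.length - 1].last,
  };
}

// Request handler compatible with Node's built-in http module.
async function handleRequest(req, res) {
  const result = await runWorkload();
  res.writeHead(200, { "Content-Type": "application/json" });
  res.end(JSON.stringify(result));
}
```

To serve it, the handler could be passed to `require("node:http").createServer(handleRequest)` and bound to a port of your choice with `.listen(...)`.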

It should be noted that Node.js does not normally do this sort of thing very well. It is not intended to handle long-running, processor-intensive tasks. So, in this case, we are really taxing the server to highlight its limitations.

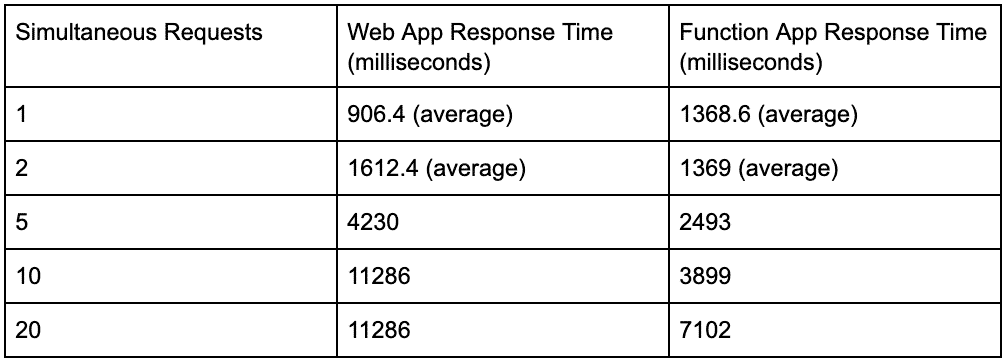

We started out by comparing an Azure web app (regular server) with an Azure function app (serverless platform). The response times shown below are the amount of time it took for all simultaneous requests to return:

You will see that for heavy processing tasks, the serverless platform quickly gets ahead. There are two important things to note about this test. The first is that, to be fair, we increased the hardware plan on the web app to a decent level: a $55.80 monthly plan with 1.75 GB of memory and dedicated hardware.

The second thing to note is that initially, the traditional server was beating the serverless app across the board. This was until we tried 50 requests all at once. It seems that the large number of requests caused the function app to scale up the hardware. After this, the function app started beating the traditional server. This is significant because we got upgraded hardware for no additional cost. The results above are what we got after hitting the function app heavily, causing it to upgrade internally.
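The measurement used in these tests, the time for all simultaneous requests to return, can be sketched as follows. This is not the harness used for the results; `timeBatch` and `fakeRequest` are hypothetical names, and `fakeRequest` merely simulates a 20 ms response so the sketch runs without a network.

```javascript
// Fire `count` requests at once and return the elapsed milliseconds
// until every one of them has completed.
async function timeBatch(sendRequest, count) {
  const start = Date.now();
  const requests = Array.from({ length: count }, (_, i) => sendRequest(i));
  await Promise.all(requests);
  return Date.now() - start;
}

// Hypothetical stand-in for a real HTTP call: resolves after about 20 ms.
function fakeRequest() {
  return new Promise((resolve) => setTimeout(resolve, 20));
}

// Example: time 50 simultaneous (simulated) requests.
timeBatch(fakeRequest, 50).then((ms) => {
  console.log(`50 simultaneous requests returned in ${ms} ms`);
});
```

Against a real endpoint on Node.js 18 or later, `sendRequest` could wrap the built-in `fetch`, for example `() => fetch(url).then((r) => r.text())`, where `url` is whichever endpoint you are testing.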

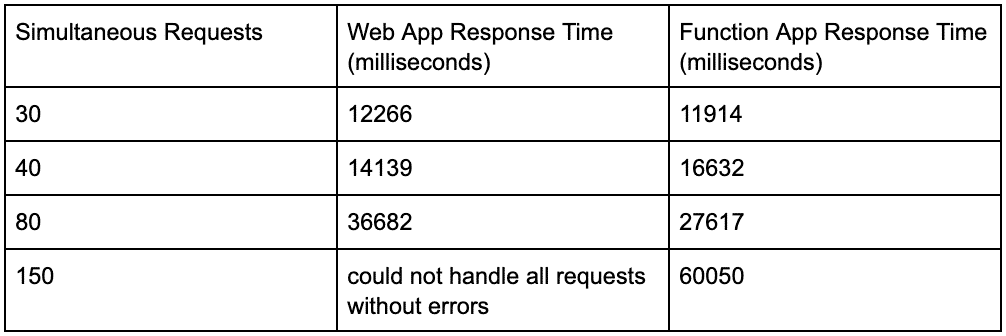

Because the traditional server started hanging when receiving more than 20 requests per second, we increased the web app instance count to 3, making it a $167.40 monthly plan. We then ran some heavier stress tests:

It’s important to remember that these tests do not simulate a real-life scenario. Since all requests are coming from the same IP address, it’s quite possible that Azure is limiting the number of resources dedicated to these requests. It’s clear, though, that at a certain point Azure Functions takes over as the better option for high-demand scenarios, especially considering the lower cost.

The function app would have to receive 35 to 40 requests per second around the clock, every day of the month, for its operational costs to exceed those of the web app, which is unlikely. The traditional server also can’t scale up as quickly to handle 150 requests per second without additional instances, making the cost and effort higher to achieve that goal.
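To put the break-even figure in perspective, the arithmetic can be sketched as below. It assumes a 30-day month and uses only the numbers quoted in this article; the "implied cost" is what the break-even claim works out to per million requests, not a figure taken from Azure's price list, which also depends on execution time and memory.

```javascript
// Seconds in a month, assuming 30 days (an assumption for this estimate).
const SECONDS_PER_MONTH = 60 * 60 * 24 * 30; // 2,592,000

// Total requests served in a month at a constant request rate.
function monthlyRequests(requestsPerSecond) {
  return requestsPerSecond * SECONDS_PER_MONTH;
}

// Cost per million requests implied by a break-even rate and a monthly plan cost.
function impliedCostPerMillion(monthlyPlanCost, requestsPerSecond) {
  return (monthlyPlanCost / monthlyRequests(requestsPerSecond)) * 1e6;
}

const WEB_APP_MONTHLY_COST = 167.4; // three instances at $55.80 each

for (const rps of [35, 40]) {
  const total = monthlyRequests(rps);
  const perMillion = impliedCostPerMillion(WEB_APP_MONTHLY_COST, rps);
  console.log(`${rps} req/s = ${total} requests/month, about $${perMillion.toFixed(2)} per million`);
}
```

At 35 to 40 requests per second the function app would be serving roughly 90 to 104 million requests a month, which shows how much sustained traffic is needed before the fixed-price plan becomes the cheaper option.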

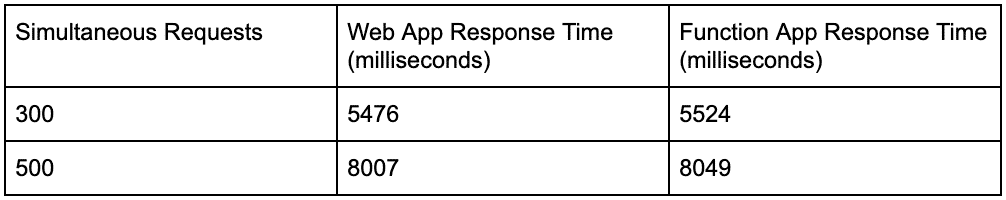

For one final test, we slimmed down the workload so that instead of generating a random number 100,000 times, each request simply generates 1 random number and returns the value. This is quite a light operation but is much closer to what an actual server would be doing. We got the following results, showing that for simple operations both platforms are roughly equivalent:

Conclusion

Whether you choose a web or function app depends on what you are trying to achieve. What these tests show is that the more you move towards heavier processing and longer-running tasks, the more you’re going to need a serverless platform. If the purpose of a server is simply to hand out static pages and process basic API requests, then perhaps an Azure Web App (or AWS Elastic Beanstalk app) is a good option as it is a great, scalable solution. However, if your server processes images or long-running requests, consider a serverless option like Azure Functions or AWS Lambda.

An alternative is a hybrid approach: handle your main requests (such as page loads and standard API requests) with a traditional server, and offload the heavy tasks to a serverless platform to prevent your web app from slowing down. We’ve found this solution to be quite effective as it allows developers to build on a more familiar architecture while also leveraging the power of a limitless framework.
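As a rough sketch of the hybrid idea, the web app can decide per route whether to handle a request itself or forward it to a function app. The route names and the two handler parameters below are hypothetical; in practice `offloadToFunction` might be an HTTP call to your function's URL or a message placed on a queue.

```javascript
// Hypothetical routes considered too heavy for the web app itself.
const HEAVY_ROUTES = new Set(["/resize-image", "/generate-report"]);

// Build a handler that keeps light requests local and forwards heavy ones.
// Both callbacks take (path, payload) and return a promise or a value.
function createHybridHandler(handleLocally, offloadToFunction) {
  return async function handle(path, payload) {
    if (HEAVY_ROUTES.has(path)) {
      return offloadToFunction(path, payload);
    }
    return handleLocally(path, payload);
  };
}

// Example wiring with stub handlers standing in for real implementations.
const handle = createHybridHandler(
  async (path) => ({ servedBy: "web-app", path }),
  async (path) => ({ servedBy: "function-app", path })
);

handle("/resize-image", {}).then((result) => console.log(result.servedBy));
```

Keeping the routing decision in one place makes it easy to move a route from one side to the other as its workload changes.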